Fouaz Bouguerra

on 30 November 2021

Data centre networking: what is OVS?

This blog post is part of our data centre networking series:

- Data centre networking: What is SDN

- Data centre networking: SDN fundamentals

- Data centre networking: SDDC

- Data centre networking: What is OVS

- Data centre networking: What is OVN

- Data centre networking: SmartNICs

In an earlier post in this series, we discussed Software-Defined Networking (SDN) and the key drivers behind it. Virtualisation is one of the fundamental aspects that characterise SDN, and it has influenced the architecture of network switching in the data centre. OVS (Open vSwitch) is a fundamental component of modern, open data centre SDNs, where it aggregates all the virtual machines at the server hypervisor layer. It is the ingress point for all traffic leaving the VMs, and it can forward traffic between multiple virtual network functions in the form of service chains. Let’s take a closer look at what OVS is.

What is OVS?

Open vSwitch is an open source, multilayer virtual switch, implemented in software and designed to run in virtual machine environments. It is licensed under the Apache License 2.0. It provides access to the virtual networking layer with standard control and visibility interfaces, and enables distribution across multiple physical servers. Open vSwitch supports multiple Linux-based virtualisation technologies, including Xen/XenServer, KVM, and VirtualBox.

Open vSwitch is written in C, and its forwarding layer abstraction makes it portable to different software and hardware platforms. The current release of Open vSwitch supports the following key features:

- High-performance forwarding using a Linux kernel module

- Standard 802.1Q VLAN model with trunk and access ports

- NIC bonding with or without LACP on upstream switch

- OpenFlow protocols, NetFlow, sFlow(R), and mirroring for increased visibility

- QoS (Quality of Service) configuration, plus policing

- Multiple tunneling protocols like Geneve, GRE, VXLAN, STT, and LISP

- 802.1ag connectivity fault management

- Transactional configuration database with C and Python bindings
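To make the trunk and access port model from the list above more concrete, here is a minimal Python sketch of generic 802.1Q behaviour. It is not Open vSwitch code: the `Port` class and the `ingress_vlan` and `forward` functions are hypothetical names, and the rules are deliberately simplified (for example, this sketch drops untagged frames on trunk ports).

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Port:
    name: str
    tag: Optional[int] = None             # access port: the single VLAN it belongs to
    trunks: FrozenSet[int] = frozenset()  # trunk port: allowed VLANs (empty = all)


def ingress_vlan(port: Port, frame_vlan: Optional[int]) -> Optional[int]:
    """Return the VLAN a received frame is classified into, or None to drop it."""
    if port.tag is not None:
        # Access port: only untagged frames are accepted (simplification).
        return port.tag if frame_vlan is None else None
    if frame_vlan is None:
        return None  # simplification: this sketch has no native VLAN on trunks
    if port.trunks and frame_vlan not in port.trunks:
        return None
    return frame_vlan


def forward(in_port: Port, frame_vlan: Optional[int], out_port: Port) -> Optional[str]:
    """Describe how the frame leaves out_port, or return None if it is not sent."""
    vlan = ingress_vlan(in_port, frame_vlan)
    if vlan is None:
        return None
    if out_port.tag is not None:
        # Access ports send untagged frames, and only for their own VLAN.
        return "untagged" if out_port.tag == vlan else None
    if out_port.trunks and vlan not in out_port.trunks:
        return None
    return f"tagged:{vlan}"


vm1 = Port("vm1", tag=10)
uplink = Port("uplink", trunks=frozenset({10, 20}))
print(forward(vm1, None, uplink))  # tagged:10
```

A VM attached to an access port never sees VLAN tags, while the uplink trunk carries the tag so that the upstream switch can keep the VLANs apart.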

What are Open vSwitch benefits?

Virtual environments are often characterised by mobility and high rates of change, which creates a number of configuration and management challenges. The following characteristics help Open vSwitch meet these requirements.

In a modern data centre infrastructure, workloads and virtual machines are no longer static, and they can be highly dynamic in large, dense server clusters. It is important to keep track of the network state of every virtual entity in the infrastructure, so that this state is easily identifiable and can migrate with the entity between hosts when needed. Network state here includes traditional “soft state” (such as an entry in an L2 learning table), L3 forwarding state, policy routing state, ACLs, and monitoring configuration (e.g. NetFlow, IPFIX, sFlow).

Open vSwitch has capabilities that can be leveraged to provide insight and deep traffic visibility. It can be used in a model-driven networking approach to allow a network control system to respond to environment changes with the support of simple accounting and monitoring protocols such as NetFlow, IPFIX, and sFlow. For inventory management and observability purposes, Open vSwitch has a network state database (OVSDB) that can support remote triggers from a network orchestrator to track VM migrations and states.
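As an illustration of the remote triggers mentioned above, the sketch below builds an OVSDB `monitor` request, following our reading of the OVSDB management protocol (RFC 7047). It only constructs the JSON-RPC message and does not open a connection. The helper name `build_monitor_request` and the monitor identifier `"vm-tracker"` are hypothetical; the `Open_vSwitch` database and its `Interface` table are part of the standard schema.

```python
import json


def build_monitor_request(request_id, database, table, columns, monitor_id="vm-tracker"):
    """Build a JSON-RPC 'monitor' request for one OVSDB table (sketch only)."""
    monitor_request = {
        "columns": columns,
        # Ask for the initial rows and for every later change.
        "select": {"initial": True, "insert": True, "delete": True, "modify": True},
    }
    return {
        "method": "monitor",
        # params: database name, client-chosen value echoed back in update
        # notifications, and the per-table monitor requests.
        "params": [database, monitor_id, {table: [monitor_request]}],
        "id": request_id,
    }


request = build_monitor_request(1, "Open_vSwitch", "Interface", ["name", "external_ids"])
print(json.dumps(request, indent=2))
```

An orchestrator that sends such a request to ovsdb-server would then receive update notifications whenever a matching row is inserted, modified, or deleted, for example when a VM interface appears on a host after a migration.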

With SDN and VNFs at the heart of modern data centre architectures, Open vSwitch, seen as a network function, can be part of an SDN estate driven directly by an SDN controller or by an OpenStack service such as Neutron.

OVS Components

The main components that an OVS distribution provides are:

- ovs-vswitchd, a daemon that implements and controls the switch on the local machine, along with a companion Linux kernel module for flow-based switching. The daemon reads its configuration from the database server and uses it to set up the datapaths.

- ovsdb-server, a lightweight database server that ovs-vswitchd queries to obtain its configuration, which includes interface, flow table, and VLAN settings. It provides RPC interfaces to the vswitch databases.

- ovs-dpctl, a tool for configuring the switch kernel module and controlling the forwarding rules.

- ovs-vsctl, a utility for querying and updating the configuration of ovs-vswitchd. It does so by reading and modifying the configuration database held by ovsdb-server.

- ovs-appctl, a utility that sends commands to running Open vSwitch daemons (it is rarely needed in routine operation).

- Scripts and specs for building RPMs for Citrix XenServer and Red Hat Enterprise Linux. The XenServer RPMs allow Open vSwitch to be installed on a Citrix XenServer host as a drop-in replacement for its switch, with additional functionality.

Open vSwitch also provides some supporting tools:

- ovs-ofctl, a utility for querying and controlling OpenFlow switches and controllers.

- ovs-pki, a utility for creating and managing the public-key infrastructure for OpenFlow switches.

- ovs-testcontroller, a simple OpenFlow controller that may be useful for testing (though not for production).

- A patch to tcpdump that enables it to parse OpenFlow messages.

- ovsdbmonitor, a graphical utility to display the data stored in ovsdb-server.
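To show how the command-line tools above fit together, here is a small Python sketch that builds typical `ovs-vsctl` and `ovs-ofctl` invocations as argument lists. It only prints the commands and does not run them, so it works on a machine without Open vSwitch installed. The helper function names, the bridge name `br0`, and the port name `tap0` are illustrative assumptions.

```python
import shlex


def add_bridge(bridge):
    # --may-exist makes the command a no-op if the bridge is already there.
    return ["ovs-vsctl", "--may-exist", "add-br", bridge]


def add_access_port(bridge, port, vlan):
    # tag=<vlan> sets the port's VLAN, making it an access port.
    return ["ovs-vsctl", "--may-exist", "add-port", bridge, port, f"tag={vlan}"]


def add_flow(bridge, flow):
    # The flow is given in ovs-ofctl's textual match/actions syntax.
    return ["ovs-ofctl", "add-flow", bridge, flow]


def dump_flows(bridge):
    return ["ovs-ofctl", "dump-flows", bridge]


commands = [
    add_bridge("br0"),
    add_access_port("br0", "tap0", 10),
    add_flow("br0", "priority=100,in_port=1,actions=output:2"),
    dump_flows("br0"),
]
for argv in commands:
    print(shlex.join(argv))
```

On a host that runs Open vSwitch, the same lists could be passed to `subprocess.run(argv, check=True)`, typically with root privileges.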

Different implementations of OVS

When building software-defined networks, an SDN controller is required to handle the high-level network policies, which define, for example, how virtual networks communicate with each other and which flows are permitted or blocked. OVS is the component that enforces these policies, which the controller programs into it via OpenFlow.
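The way a switch enforces such policies can be pictured as a priority-ordered flow table. The following Python sketch is a simplified model, not the OVS implementation: `FlowEntry` and `lookup` are hypothetical names, matches are exact-match only, and we assume that a packet matching no entry is dropped.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FlowEntry:
    priority: int
    match: Dict[str, str]  # field name -> required value; empty matches everything
    action: str            # e.g. "drop" or "output:2"


def lookup(table: List[FlowEntry], packet: Dict[str, str]) -> str:
    """Return the action of the highest-priority entry that matches the packet."""
    for entry in sorted(table, key=lambda e: e.priority, reverse=True):
        if all(packet.get(field) == value for field, value in entry.match.items()):
            return entry.action
    return "drop"  # assumption in this sketch: no match means drop


table = [
    FlowEntry(priority=200, match={"nw_src": "10.0.0.5"}, action="drop"),
    FlowEntry(priority=100, match={"in_port": "1"}, action="output:2"),
    FlowEntry(priority=0, match={}, action="drop"),
]

print(lookup(table, {"in_port": "1", "nw_src": "10.0.0.7"}))  # output:2
print(lookup(table, {"in_port": "1", "nw_src": "10.0.0.5"}))  # drop
```

The blocked source address wins over the forwarding rule because its entry has the higher priority, which is how a controller can layer "deny" policies over general connectivity.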

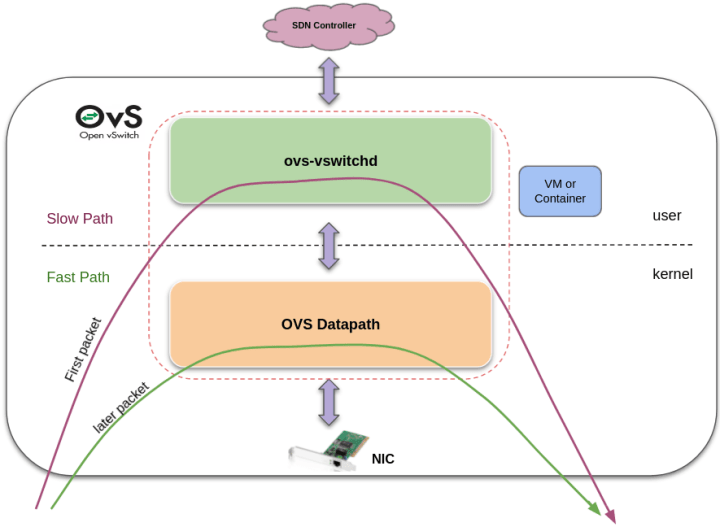

The classic OVS design has two main components, which reside separately in user space and kernel space. ovs-vswitchd runs in the operating system’s user space and is usually referred to as the “slow path”. The other component, the OVS datapath, runs in the kernel and is the “fast path” that traffic flows take through OVS.

When the first packet of a flow enters OVS, the datapath has no cached entry for it, so the packet is passed to ovs-vswitchd, checked against the OVS flow table, and a cache entry is inserted into the datapath (we say that this packet takes the “slow path”). Subsequent packets of the same flow are handled directly by the datapath (the “fast path”).
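The split between the two paths can be sketched in a few lines of Python. This is a toy model under our own assumptions: the `Datapath` class, its flow key of `(in_port, src, dst)`, and the `slow_path` callback are hypothetical and much simpler than the real datapath cache.

```python
class Datapath:
    """Toy fast path: an exact-match cache in front of a slow-path callback."""

    def __init__(self, slow_path):
        self.slow_path = slow_path  # stands in for the flow table lookup in ovs-vswitchd
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def receive(self, packet):
        key = (packet["in_port"], packet["src"], packet["dst"])
        action = self.cache.get(key)
        if action is None:
            # Cache miss: consult the slow path and remember its decision.
            self.misses += 1
            action = self.slow_path(packet)
            self.cache[key] = action
        else:
            # Cache hit: the packet never leaves the fast path.
            self.hits += 1
        return action


def flow_table_lookup(packet):
    # Hypothetical policy: forward everything arriving on port 1 to port 2.
    return "output:2" if packet["in_port"] == 1 else "drop"


dp = Datapath(flow_table_lookup)
packet = {"in_port": 1, "src": "10.0.0.1", "dst": "10.0.0.2"}
for _ in range(3):
    dp.receive(packet)
print(dp.misses, dp.hits)  # 1 2
```

Only the first of the three identical packets reaches the slow path, which is why the cost of the full flow table lookup is amortised over the lifetime of a flow.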

In the early days of OVS, before DPDK or Netmap were available, the OVS kernel module was the most performant way to do packet I/O. Classic OVS is, however, hard to operate when upgrades or bug fixes are needed: developers have to make changes in both the kernel and OVS, and those changes have to be accepted by the respective communities.

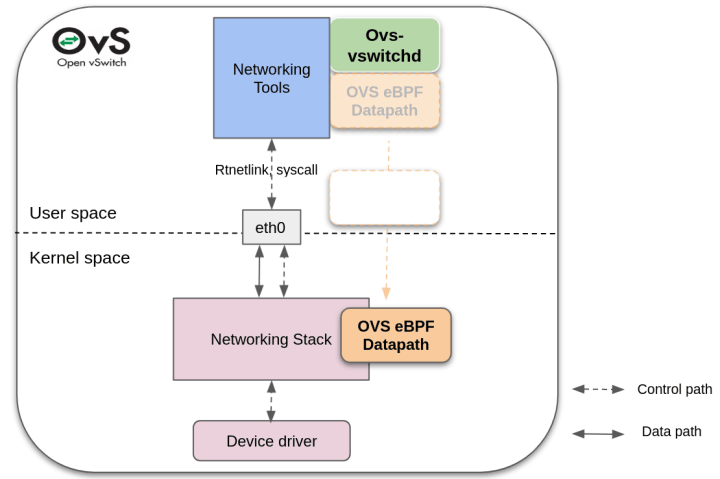

OVS with eBPF: eBPF is a Linux kernel technology that allows programs to be loaded into the Linux kernel at runtime, after a verifier has checked that they are safe to run. In this implementation, the OVS datapath is written as an eBPF program, which is loaded into the kernel to process packets.

This OVS implementation offers better maintainability to developers, as they can easily add new features and benefit from the work of the eBPF community. When an OVS upgrade or bug fix is needed, it can be applied at the eBPF datapath level without disrupting the kernel or its networking tools, such as ifconfig or tcpdump. It is nevertheless worth mentioning that it can show a 10–20% degradation in traffic forwarding performance.

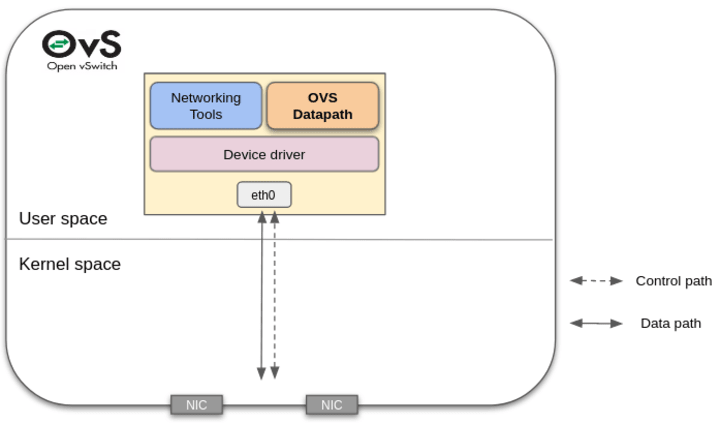

OVS with DPDK: With the advent of DPDK in 2010 and its first integration into OVS in 2014, it became possible to use the DPDK kernel-bypass library to build OVS datapaths in user space. The drivers and networking tools also use the DPDK library. Today, this is still a viable implementation, and it has since been continuously evaluated in different setups.

This OVS implementation delivers very good, close to line-rate performance. It can serve a multitude of high-performance network appliance use cases, and OVS performance scales significantly when multiple cores are used. It also provides good maintainability and a limited dependency on the OVS community. The drawback is that operators have to manage two separate network configurations, one on the DPDK side and one on the kernel side. Existing networking tools such as tcpdump may also be limited in what they can do with DPDK-managed interfaces.

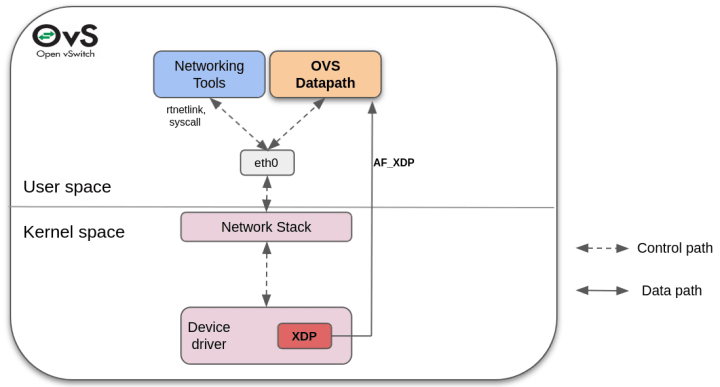

OVS with AF_XDP: This newer model provides a userspace datapath built on AF_XDP. It was introduced to address the core limitations of the previous configurations and to improve traffic forwarding.

In this implementation, the OVS datapath remains in userspace, but the device driver runs inside the Linux kernel. Recent kernels come with AF_XDP sockets and allow a small, fast XDP program to run in the device driver with early access to the packets. Selective kernel bypass can be enabled for designated packets by sending them directly to the OVS datapath via an AF_XDP socket. Specific traffic, such as management traffic, can still be sent through the kernel and its network stack, where the network tools continue to work properly, and the device driver remains in kernel space.
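The selective bypass described above comes down to a per-packet steering decision. A real XDP program is written in restricted C and compiled to eBPF, so the Python below is only a model of the decision logic. `XDP_PASS` and `XDP_REDIRECT` mirror the names of the kernel's XDP verdicts, while the `steer` function, the packet dictionaries, and the choice of which traffic counts as management traffic are assumptions made for this sketch.

```python
XDP_PASS = "XDP_PASS"          # hand the packet to the normal kernel network stack
XDP_REDIRECT = "XDP_REDIRECT"  # redirect the packet, here to the AF_XDP socket

# Hypothetical policy: keep SSH on the kernel path so the host stays manageable.
MANAGEMENT_TCP_PORTS = {22}


def steer(packet):
    """Decide whether a packet bypasses the kernel stack (model only)."""
    if packet.get("proto") == "arp":
        return XDP_PASS
    if packet.get("proto") == "tcp" and packet.get("dst_port") in MANAGEMENT_TCP_PORTS:
        return XDP_PASS
    # Everything else goes straight to the userspace OVS datapath.
    return XDP_REDIRECT


print(steer({"proto": "tcp", "dst_port": 22}))    # XDP_PASS
print(steer({"proto": "udp", "dst_port": 4789}))  # XDP_REDIRECT
```

Traffic that takes the `XDP_PASS` branch remains visible to kernel-side tools such as tcpdump, which is the operational advantage this model is meant to illustrate.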

This OVS with XDP implementation offers fast packet processing, with performance comparable to DPDK’s. It is easy to maintain and operate, and the traditional networking tools remain fully operational.

Even though the traffic processing performance of OVS with XDP can still be improved further, it already improves the developer experience. Patching is easier, and CI/CD is natively incorporated in cross-platform designs.

Conclusion

Open vSwitch focuses on automation and dynamic control for large open source networking environments. One of its important goals is high performance, which it achieves by leveraging Linux kernel components. Another guiding principle is to preserve, as far as possible, the reusability of existing networking tools and functions (such as ifconfig, tcpdump, and QoS).

For more detailed information, refer to the OVS project home page.

What is next?

The next blog will be dedicated to OVN and how it extends the SDN control plane to OVS switches in an open data centre networking stack.