Bartłomiej Poniecki-Klotz

on 10 February 2022

Deploying Kubeflow Pipelines with Azure AKS spot instances

Introduction

Charmed Kubeflow is an MLOps platform from Canonical, designed to improve the lives of data engineers and data scientists by delivering an end-to-end solution for AI/ML model ideation, training, release and maintenance, from concept to production. To that end, Charmed Kubeflow includes Kubeflow Pipelines, an engine for orchestrating MLOps workflows such as feature engineering, deep learning model training, experiments and release of model artifacts to production.

In this blog post, you will learn how to leverage Azure spot instances to optimize the cost of Charmed Kubeflow based MLOps workflows running on the Microsoft Azure cloud.

Spot instances

The spot instance feature is a cost-saving way of running virtual machines in the cloud. Cloud providers like Azure offer spot instances from their spare capacity. If they are the same as on-demand instances but a lot cheaper, everyone should always use them, right?! The catch is that the cloud provider can evict the user workload whenever it needs this compute capacity back. It provides a new instance based on the spot configuration, but the workload will be interrupted. Depending on the instance type, this happens between 1% and 20% of the time.

Spot instance prices change based on the amount of spare capacity that is available for a given instance type. When a lot of people request the same instance type, the cost of a spot instance can be equal to the on-demand price in a region. Therefore, we can define the maximum price we want to pay. When the spot price exceeds this value, the spot instance will be evicted.
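The maximum-price rule can be sketched in a few lines of Python. This is only an illustration of the rule described above, not Azure's actual eviction logic; the function name is hypothetical, and the use of -1 as "no price-based eviction" follows the convention Azure uses for its spot max price setting.

```python
NO_PRICE_CAP = -1.0  # Azure convention: -1 means "never evict because of price"


def evicted_for_price(spot_price: float, max_price: float = NO_PRICE_CAP) -> bool:
    """Return True when the current spot price exceeds the configured maximum."""
    if max_price == NO_PRICE_CAP:
        # No explicit cap: the instance is not evicted for price reasons.
        return False
    return spot_price > max_price


# Prices in USD per hour; the 0.10 cap is an arbitrary example value.
print(evicted_for_price(0.0487, max_price=0.10))
print(evicted_for_price(0.1500, max_price=0.10))
print(evicted_for_price(0.2320))
```

Note that a price cap only covers price-based eviction; the instance can still be evicted when Azure needs the capacity back.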

MLOps Workflows

Based on the above features, the best candidates to run on spot instances are workloads that are not time-sensitive and will not be impacted by eviction. Additionally, we can use spot instances for short tests and experiments with expensive hardware when stability is less important than cost.

In ML Workflows, there are a few good candidates for running on spot instances:

- Data processing

- Distributed training and hyperparameter tuning

- Model training (with checkpointing if it takes a long time)

- Batch inference

It is not recommended to use spot instances for:

- Kubernetes control plane

- Notebooks and dashboards

- Datastores like Minio or databases

- Model serving for online inference

AKS – Azure Kubernetes Service

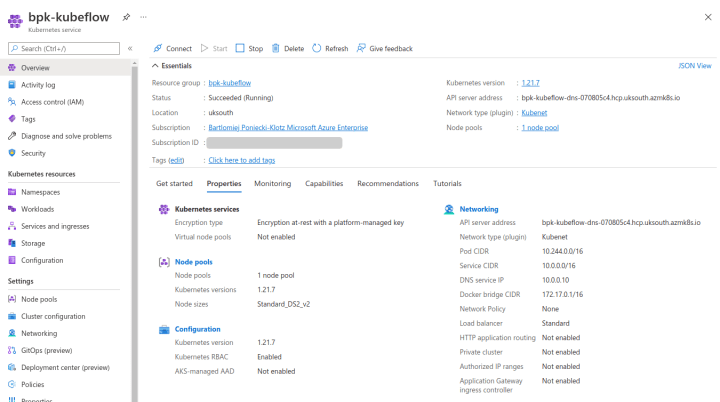

AKS is a managed Kubernetes service in Azure. It is backed by groups of VMs called node pools. All instances in a node pool are of the same type. To create a cluster, you need at least one node pool with on-demand or reserved instances: AKS manages the control plane itself, but the default system node pool, which hosts critical system pods, cannot use spot instances. We can add spot instances later as additional node pools.

Adding an additional node pool is only the first step to handle applications correctly in an AKS cluster. Nodes backed by a spot instance carry a property called a taint, which keeps Pods away unless they explicitly accept it. A Pod needs a matching toleration to be scheduled on such a node. To schedule a Pod on the spot instance nodes in AKS, we need to configure the Pod's toleration according to the taint value.
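To make the taint and toleration relationship concrete, here is a simplified Python sketch of the matching rules. It is not the real scheduler code, and the function names are hypothetical. The taint shown, kubernetes.azure.com/scalesetpriority=spot:NoSchedule, is the one AKS applies to spot node pools.

```python
# Taint that AKS places on spot node pools (key=value:effect).
SPOT_TAINT = {
    "key": "kubernetes.azure.com/scalesetpriority",
    "value": "spot",
    "effect": "NoSchedule",
}

# Toleration a Pod needs in its spec to be schedulable on those nodes.
SPOT_TOLERATION = {
    "key": "kubernetes.azure.com/scalesetpriority",
    "operator": "Equal",
    "value": "spot",
    "effect": "NoSchedule",
}


def tolerates(toleration: dict, taint: dict) -> bool:
    """Simplified version of the Kubernetes toleration-matching rules."""
    # An empty effect on the toleration matches every taint effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    key = toleration.get("key")
    if toleration.get("operator", "Equal") == "Exists":
        # "Exists" with no key tolerates every taint; otherwise keys must match.
        return not key or key == taint["key"]
    # "Equal" requires both key and value to match.
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def can_schedule(pod_tolerations: list, node_taints: list) -> bool:
    """A Pod fits a node only if every hard taint on the node is tolerated."""
    return all(
        any(tolerates(t, taint) for t in pod_tolerations)
        for taint in node_taints
        if taint["effect"] in ("NoSchedule", "NoExecute")
    )


print(can_schedule([], [SPOT_TAINT]))                 # Pod without toleration
print(can_schedule([SPOT_TOLERATION], [SPOT_TAINT]))  # Pod with toleration
```

A toleration only permits scheduling on the tainted node; it does not force the Pod to land there.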

Example

Is it really worth the hassle? Let’s assume that we have an MLOps workflow implemented in Charmed Kubeflow on AKS for 20 different models. To begin with, we used only a single machine type – D4s_v3, which is a general-purpose machine. For the simplicity of calculation, we will assume that we do not change the instance type.

Assumptions per month:

- 400 hours of model training

- 1000 hours of experiment runs

- 20 model endpoints exposed for online inference

- 3 TB of data

- 2000 hours of data preprocessing for ML

First, we identify the workflows that can use the spot instance:

- Data processing

- Experiment runs

- Model training

This gives us a total of 3400 hours of computing on D4s_v3. At the time of writing, Azure’s published price for an on-demand instance is $0.2320 per hour. For the month it is around $788 (UK South region).

Spot instances can be evicted at any time. Azure publishes the estimated rate of eviction per instance type. The eviction rate for instance type D4s_v3 is 5%. Assuming every evicted workload has to be rerun from scratch, 5% of the workloads will take double the time, so the total processing time increases by 5% to 3570 hours. The currently published spot instance price in UK South is $0.0487 per hour, which makes it around $173 per month.

The two approaches cost around $788 and $173 per month respectively, so spot instances are about 78% cheaper for data processing, experiment runs and model training. It seems it is indeed worth the hassle to set this up!
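The arithmetic behind this comparison can be reproduced with a short Python script. The prices, hours and eviction rate are the ones quoted in this post; the rerun-from-scratch assumption is a simplification, and the results come out within a dollar of the rounded figures above.

```python
HOURS = {"model_training": 400, "experiment_runs": 1000, "data_preprocessing": 2000}
ON_DEMAND_PRICE = 0.2320  # USD per hour, D4s_v3, UK South (from this post)
SPOT_PRICE = 0.0487       # USD per hour, D4s_v3, UK South (from this post)
EVICTION_RATE = 0.05      # published estimate for D4s_v3 (from this post)

base_hours = sum(HOURS.values())
on_demand_cost = base_hours * ON_DEMAND_PRICE

# Assumption: each evicted workload is rerun from scratch, so 5% of the
# workloads take double the time.
spot_hours = base_hours * (1 + EVICTION_RATE)
spot_cost = spot_hours * SPOT_PRICE

saving = 1 - spot_cost / on_demand_cost

print(f"hours: {base_hours} on-demand, {spot_hours:.0f} spot")
print(f"cost:  ${on_demand_cost:.2f} on-demand, ${spot_cost:.2f} spot")
print(f"saving: {saving:.0%}")
```

Changing EVICTION_RATE to the value published for another instance type shows how quickly the saving shrinks as evictions become more frequent.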

Let’s add a spot instance to the running system

We are going to extend the existing AKS cluster with spot instances and allow the orchestration engine to schedule workflow steps on them. We divided the implementation into 3 parts:

- adding a new node pool to the AKS cluster

- changes to the workflow definition

- workflows execution and result verification.

Preparations

You need a working Kubeflow deployment on AKS to follow the implementation. Instructions for setting up Kubeflow on an AKS cluster can be found here: https://ubuntu.com/tutorials/install-kubeflow-on-azure-kubernetes-service-aks. The Kubeflow version used is 1.4.

New node pool

In the cluster details screen, go to Settings -> Node pools. We have only a single agentpool that hosts Juju and the Charmed Kubeflow pods. We want to add an additional node pool that will contain the spot instances. The spot instances will then be configured so that no applications can be accidentally placed there.

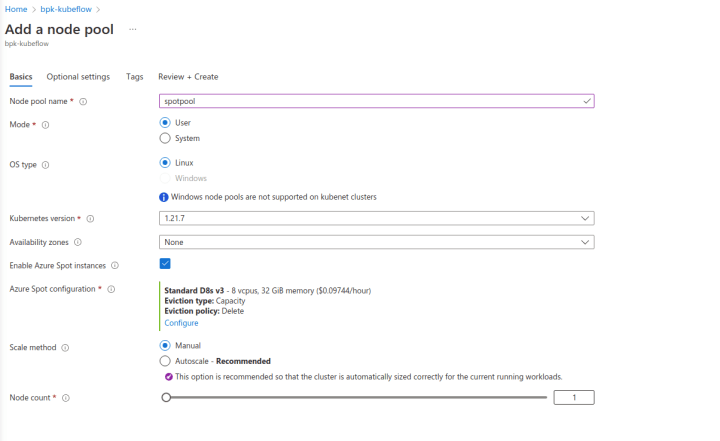

Select “Add node pool”. After that, tick “Enable Azure Spot instances” to use spot instances instead of on-demand ones.

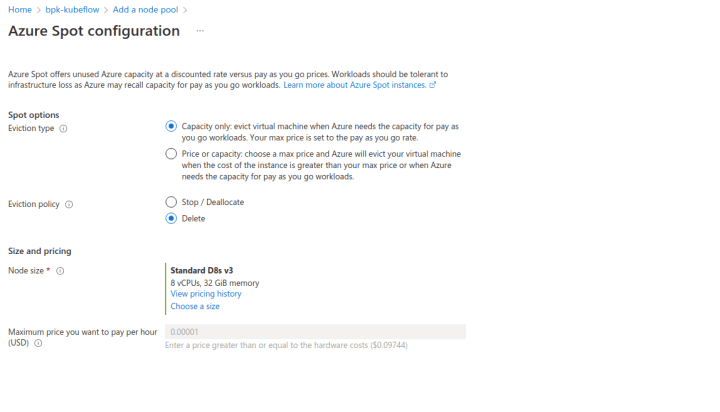

The Azure Spot configuration is important. Here you specify when and how your nodes can be evicted (“Eviction type” and “Eviction policy” respectively). If your workload has a maximum price above which it is not worth running, you can set it here.

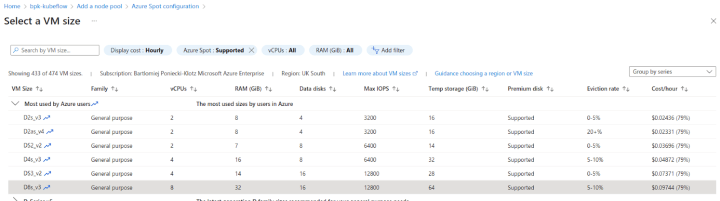

Now it’s time to select the node VM size. The Azure portal shows the current cost per hour with the spot instance discount included. You need to be aware that the prices change dynamically. Additionally, the Eviction rate presented there is not a guaranteed value. All these parameters are important when selecting the VM size and calculating the TCO of using spot instances.

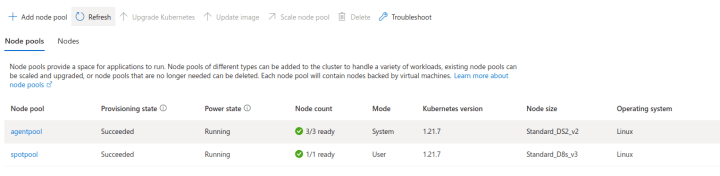

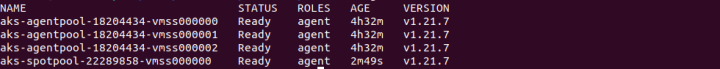
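The same node pool can also be created from the command line instead of the portal. The sketch below builds an `az aks nodepool add` invocation in Python; the resource group, cluster and pool names are hypothetical placeholders, and the VM size and node count are example values rather than the settings used in the screenshots.

```python
import shlex


def spot_nodepool_command(
    resource_group: str,
    cluster_name: str,
    pool_name: str = "spotpool",
    vm_size: str = "Standard_D4s_v3",
    node_count: int = 1,
    max_price: float = -1,
) -> list:
    """Build the argv for adding a spot node pool with the Azure CLI."""
    return [
        "az", "aks", "nodepool", "add",
        "--resource-group", resource_group,
        "--cluster-name", cluster_name,
        "--name", pool_name,
        "--priority", "Spot",            # spot instead of on-demand VMs
        "--eviction-policy", "Delete",   # delete the VM when it is evicted
        "--spot-max-price", str(max_price),  # -1: no price-based eviction
        "--node-vm-size", vm_size,
        "--node-count", str(node_count),
    ]


# Hypothetical resource group and cluster names.
command = spot_nodepool_command("my-resource-group", "my-aks-cluster")
print(shlex.join(command))
# To actually create the pool: subprocess.run(command, check=True)
```

Building the argument list first makes it easy to review the command before running it against a real subscription.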

After completing all required fields, go ahead and create the pool. It will take some time for the VMs to start up. You can check whether all the nodes are available in the Azure portal’s “Node pools” tab or by using kubectl:

kubectl get nodes

Workflow on on-demand instances

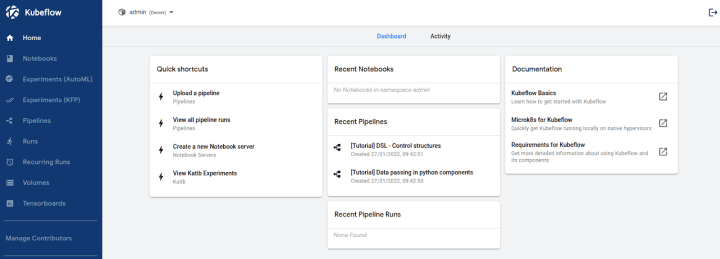

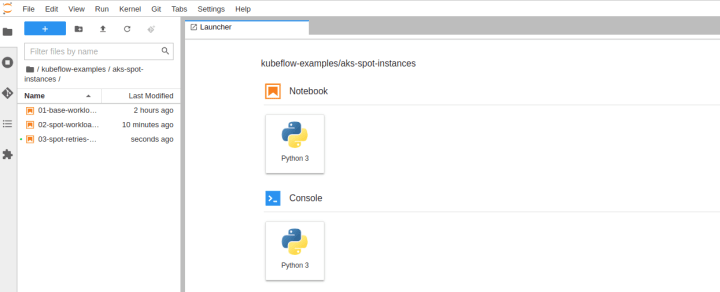

Create a new notebook in the Kubeflow notebooks and clone the repository from: https://github.com/Barteus/kubeflow-examples. Notebooks for this blog post are in the aks-spot-instance folder. You can follow along or run the notebooks from the folder. We have numbered them for easier following.

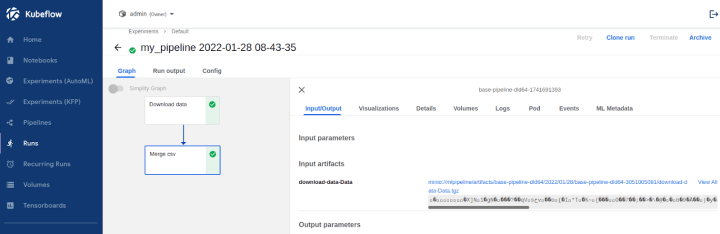

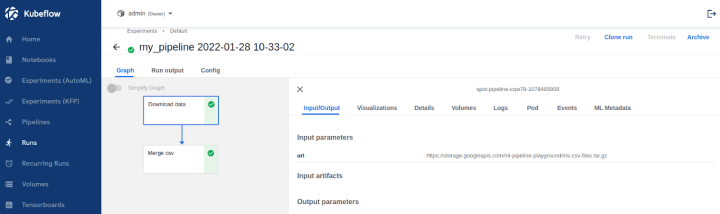

For the first run, don’t change anything: open and run the 01-base-workload notebook to see the default Kubeflow Pipelines behaviour. To see the Workflow Run details, click on the “Run details” link in the Kubeflow Dashboard and the Kubeflow Pipelines UI will be shown.

We ran a workflow that executed correctly. It downloaded the TGZ file, unpacked it and concatenated all of the CSV files in the package. As expected, the interim results are stored in Minio. Additionally, logs from the Pods and the general state of the Run can be checked in the Kubeflow Pipelines UI. We want to confirm that the scheduler did not place any of the tasks on the spot instance pool node. One way of doing so is to search through the long Pod definition YAML – under the field “nodeName”.

Another is to use kubectl:

kubectl get pods -n admin -o wide

In the Node column, we can see that all tasks were executed on agentpool. This is expected because we did not allow our tasks to execute on our spot instances pool.

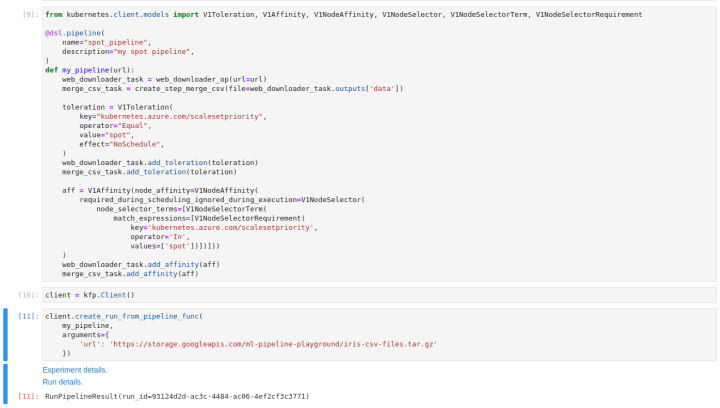
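If you prefer to check the placement programmatically, the JSON output of kubectl can be reduced to a Pod-to-node mapping. The sketch below is one possible way to do this; the Pod and node names in the sample are hypothetical, and the pool filter assumes that AKS node names contain the node pool name.

```python
import json


def pods_by_node(pod_list_json: str) -> dict:
    """Map each Pod name to the node it was scheduled on (spec.nodeName)."""
    pod_list = json.loads(pod_list_json)
    return {
        item["metadata"]["name"]: item["spec"].get("nodeName")
        for item in pod_list["items"]
    }


def pods_on_pool(placement: dict, pool_name: str) -> list:
    """Assumption: AKS node names embed the pool name, e.g. aks-<pool>-..."""
    return sorted(
        pod for pod, node in placement.items()
        if node and f"-{pool_name}-" in node
    )


# Hypothetical sample; in practice, capture the output of
#   kubectl get pods -n admin -o json
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "base-pipeline-download"},
         "spec": {"nodeName": "aks-agentpool-00000000-vmss000000"}},
        {"metadata": {"name": "base-pipeline-merge"},
         "spec": {"nodeName": "aks-agentpool-00000000-vmss000001"}},
    ]
})

placement = pods_by_node(SAMPLE)
print(pods_on_pool(placement, "agentpool"))
print(pods_on_pool(placement, "spotpool"))
```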

Workflow on spot instances

We want to allow task execution on spot instances. The Kubernetes Toleration mechanism will help with this. By adding the proper Toleration to each of the tasks, we give them the ability to be executed on spot instances. We can leave the rest to the scheduler and, in most cases, all tasks will run on spot instances, but we will not have full assurance of that. If we have tasks that must run on a certain type of node (for example, one with a GPU of a required size), we need an additional Affinity configuration to enforce which type of nodes can be used to execute the tasks.

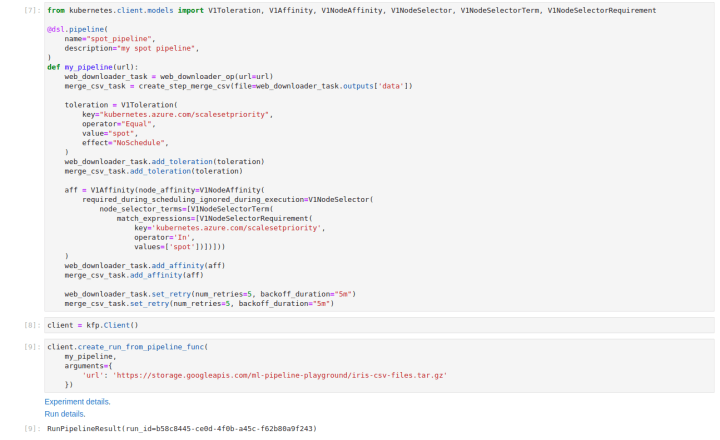

The changes will be done only at the pipeline level. Tasks are completely unaware of enforced Kubernetes configuration details. We modified the previous my_pipeline method with both Toleration and Affinity. Each configuration is set up per task. This gives us some flexibility to run tasks on the most cost-effective node.

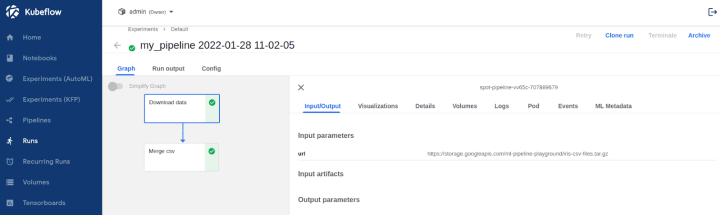
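For readers who cannot see the screenshots, the resulting Pod-level settings can be sketched as plain Python dictionaries. This is not the my_pipeline code from the notebook, and the helper name is hypothetical; it only shows the Toleration and node Affinity fields that end up in the Pod definition. The key kubernetes.azure.com/scalesetpriority is both the taint key and the node label that AKS sets on spot nodes. In the Kubeflow Pipelines v1 SDK, such settings are typically attached to a task with its add_toleration and add_affinity methods.

```python
import copy

SPOT_KEY = "kubernetes.azure.com/scalesetpriority"


def allow_on_spot(pod_spec: dict, require_spot: bool = True) -> dict:
    """Return a copy of a Pod spec that tolerates (and optionally requires) spot nodes."""
    spec = copy.deepcopy(pod_spec)

    # Toleration: lets the scheduler place the Pod on tainted spot nodes.
    spec.setdefault("tolerations", []).append(
        {"key": SPOT_KEY, "operator": "Equal", "value": "spot", "effect": "NoSchedule"}
    )

    if require_spot:
        # Affinity: restricts the Pod to nodes labelled as spot instances.
        # Note: this sketch overwrites any affinity already present in the spec.
        spec["affinity"] = {
            "nodeAffinity": {
                "requiredDuringSchedulingIgnoredDuringExecution": {
                    "nodeSelectorTerms": [
                        {"matchExpressions": [
                            {"key": SPOT_KEY, "operator": "In", "values": ["spot"]}
                        ]}
                    ]
                }
            }
        }
    return spec


# Hypothetical minimal Pod spec for a single pipeline task.
task_spec = {"containers": [{"name": "main", "image": "python:3.9"}]}
patched = allow_on_spot(task_spec)
print(patched["tolerations"])
print(patched["affinity"]["nodeAffinity"])
```

With require_spot=False, the task may run on spot nodes but can still fall back to the on-demand pool, which matches the "leave the rest to the scheduler" behaviour described above.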

After the changes, our pipeline works perfectly fine, doing exactly the same thing. The change can be seen in the Pod definition, in the “nodeName” field.

Let’s check on which node the “spot-pipeline” was executed.

kubectl get pods -n admin -o wide | grep "spot-pipeline"

As expected, Affinity and Toleration worked: both tasks were executed on spot instances.

Graceful Eviction handling

We have a working solution that uses the spot instance to efficiently process data in Kubeflow Pipelines. There is one more thing we need to remember about spot instances, which is Eviction. At any point in time, a node VM can be evicted – and we will need to be able to handle this gracefully. Kubeflow Pipelines has a function called retries which comes to the rescue here. We can set the retry policy for each of the tasks that will be executed on a spot instance. When a spot instance gets terminated and the task fails, Kubeflow Pipelines will wait and then retry the task – it’ll keep doing this until the max retries count is reached.

We have set our tasks to retry up to 5 times, with 5 minutes between attempts to give the new node time to spawn.

Again the workflow runs perfectly fine. If a spot instance is evicted during the task run, it will retry again in 5 minutes.
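The retry behaviour can be illustrated with a small, self-contained Python sketch. It mimics the policy described above (up to 5 retries, 5 minutes apart) but is not how Kubeflow Pipelines implements it; the function and task names are hypothetical, and the sleep function is injected so the example runs instantly. In the Kubeflow Pipelines v1 SDK, the equivalent setting is applied per task with its set_retry method.

```python
import time


def run_with_retries(task, max_retries=5, backoff_seconds=300.0, sleep=time.sleep):
    """Run task(); on failure wait backoff_seconds and retry, up to max_retries times."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= max_retries:
                raise  # retries exhausted: surface the last error
            attempt += 1
            sleep(backoff_seconds)  # give the replacement node time to spawn


# Simulated task that fails twice (as if its node was evicted), then succeeds.
calls = {"count": 0}


def flaky_task():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise RuntimeError("node evicted")
    return "done"


waits = []  # record the waits instead of actually sleeping
result = run_with_retries(flaky_task, sleep=waits.append)
print(result, waits)  # prints: done [300.0, 300.0]
```

Each retry starts the task from the beginning, which is why long-running training steps benefit from checkpointing.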

You added retries to your Kubeflow Pipeline definition, which will save you from “random” errors in execution. You will notice such errors most often on instance types with an eviction rate above 20%.

Summary

We walked through the process of adding a new spot instance pool to the AKS cluster, configuring the Kubeflow Pipelines workflow to use spot instances and handle eviction properly.

There are a few things worth emphasizing:

- Spot instances are a cost-effective way of running MLOps workflows that are not time-sensitive

- Spot instances can be evicted at any point in time and workflows need to handle this gracefully

- Jupyter notebooks and datastores should not be placed on spot instances

- Model training, data preprocessing and batch inference are great candidates for spot instance utilization

Useful Links

The demo workflow we used is based on https://www.kubeflow.org/docs/components/pipelines/sdk/build-pipeline/